Python is a strong choice for building machine learning models: it is one of the most popular languages for ML programming, and it is backed by an extensive set of libraries and tools that make development faster and easier. Natural language processing (NLP) is one of the most common capabilities of machine learning applications, so choosing the right library for text recognition, processing, and analysis features matters. So, what is the best Python NLP library for your project? Let’s compare the alternatives and find the right answer.

NLP Library Explanation

To explain what an NLP library is, it makes sense to start with NLP (Natural Language Processing) itself, then cover the concept of a library in programming, and finally combine the two into one definition.

According to research, “The term Natural Language Processing encompasses a broad set of techniques for automated generation, manipulation, and analysis of natural or human languages. Although most NLP techniques inherit largely from Linguistics and Artificial Intelligence, they are also influenced by relatively newer areas such as Machine Learning, Computational Statistics, and Cognitive Science.”

As for a library in programming, the name already hints at its essence: a library is a collection of reusable functions, objects, or pieces of code that you can call from your own application. Because these functions are standardized and already tested, you do not have to write them yourself, which significantly streamlines the development process.

So, a natural language processing library can be defined as a collection of prebuilt functions that give your application language recognition, processing, and analysis capabilities. Python NLP libraries typically offer tokenizing, part-of-speech tagging, parsing, sentiment analysis, topic modeling and segmentation, named entity recognition, data cleaning, searching for similar words, and machine translation.

Python for Natural Language Processing

Python is an excellent programming language on its own. It is widely considered one of the easiest languages to learn and a good first language. Python code also tends to be clean, concise, and easy to read; even a person with little programming experience can often follow it.

The same readability makes Python well suited to machine learning: developers can express a model’s logic in a few clear lines and spend their effort on the model itself rather than on the language.

In addition, this language has a vast number of libraries and other tools for creating machine learning models. This makes the development process fast and efficient. The capabilities of these tools don't end with Python modules for NLP. There are also many libraries for image processing, handwriting recognition, neural network development, data analysis, complex computation, predictive analytics, etc.

Thinking about machine-learning project development? You are on the right track since such apps are in great demand among private and corporate users. Reach out to us to get professional assistance with choosing the best tools and cost-effective creation of your future solution.

Top Python NLP Libraries

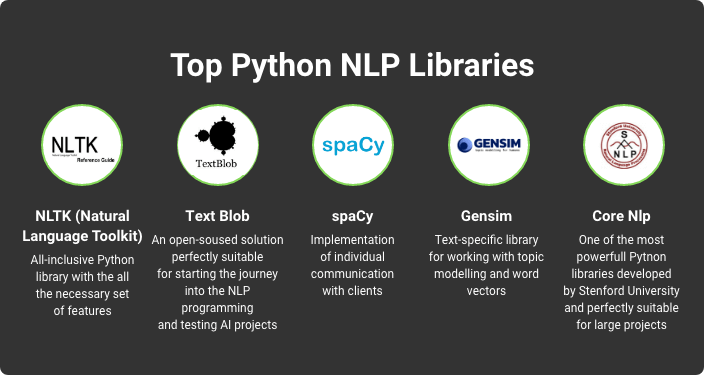

Below, we overview the most popular and efficient libraries used in Python-powered machine learning projects that need natural language processing features.

1. NLTK (Natural Language Toolkit)

According to research, “Although Python already has most of the functionality needed to perform simple NLP tasks, it’s still not powerful enough for most standard NLP tasks. This is where the Natural Language Toolkit (NLTK) comes in.”

NLTK is a fairly extensive library that provides access to all the basic functions needed to process and analyze user text. It also includes simple tools for building chatbots. The library suits both small and large projects, and its corpora and models cover a number of languages besides English, which helps projects that plan to enter international markets and communicate with users in their own languages.

Features:

Tokenizing (splitting text into sentences and words)

Part-of-speech tagging (labeling each word as a noun, verb, adjective, and so on, based on its role in the sentence)

Sentiment analysis (for example, for distinguishing between positive and negative reviews)

Corpora access

Simple pattern-based chatbot framework (nltk.chat)

Text classification

Parse trees.
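NLTK's chatbot support is pattern-based: `nltk.chat.util.Chat` matches the user's message against a list of regular expressions and returns a canned reply, optionally echoing captured text with `%1`. A minimal sketch, assuming NLTK is installed (`pip install nltk`); the patterns and replies are hypothetical examples, and no corpus downloads are needed.

```python
from nltk.chat.util import Chat, reflections

# Hypothetical (pattern, responses) pairs, tried in order; matching is case-insensitive.
# %1 in a response is replaced by the first captured group (lowercased by NLTK).
PAIRS = [
    (r"(hi|hello)\b.*", ["Hello! How can I help you?"]),
    (r"my name is (.*)", ["Nice to meet you, %1."]),
    (r"(.*)", ["Sorry, I did not understand that."]),  # catch-all fallback
]

# `reflections` swaps pronouns in captured text ("my" -> "your", "i" -> "you", ...).
bot = Chat(PAIRS, reflections)

if __name__ == "__main__":
    for message in ["Hello there", "My name is Ada", "What is NLP?"]:
        print(message, "->", bot.respond(message))
```

For intent detection beyond fixed patterns, you would typically train a text classifier instead.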

Example - Predicting words using NLTK
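One simple way to predict the next word with NLTK is a bigram model: count which words follow each word with `nltk.bigrams` and `nltk.ConditionalFreqDist`, then suggest the most frequent followers. This is a sketch, assuming NLTK is installed; the training text is a tiny made-up sample, and `wordpunct_tokenize` is used because it needs no corpus downloads. A practical model would be trained on a much larger corpus.

```python
import nltk
from nltk.tokenize import wordpunct_tokenize

# Tiny illustrative training text (an assumption for the demo).
TEXT = (
    "I like natural language processing. "
    "I like Python. "
    "Python makes natural language processing easier."
)

def build_model(text):
    """Count, for every word, which words follow it (a bigram model)."""
    tokens = [t.lower() for t in wordpunct_tokenize(text)]
    return nltk.ConditionalFreqDist(nltk.bigrams(tokens))

def predict_next(model, word, n=1):
    """Return up to n most frequent followers of `word` (empty list if unseen)."""
    word = word.lower()
    if word not in model:
        return []
    return [w for w, _count in model[word].most_common(n)]

model = build_model(TEXT)
print(predict_next(model, "natural"))    # ['language']
print(predict_next(model, "like", n=2))  # both followers seen after "like"
```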

Resources:

Getting Started on Natural Language Processing with Python. This easy-to-understand introductory article will help you learn the basic terms of NLP and see how NLTK can be used in practice.

nltk.org. This is an official resource with extensive documentation, how-tos, guides, and FAQs.

2. TextBlob

This open-source library is the right choice in two cases: it is a great option for newcomers who want to try out basic NLP functions, and it is a suitable library for a pilot project, since its simple built-in functionality makes initial prototyping a lot easier.

Features:

Part-of-speech tagging

Pluralization

Summarizing

WordNet integration

Synonym lookup (via WordNet)

Parsing

Sentiment analysis

Tokenization

Machine translation via Google Translate (deprecated, and removed in recent TextBlob releases)

Example - Finding the text’s sentiment score

Resources:

TextBlob Documentation. Here is the link to the official documentation. In fact, the presence of this well-structured and logical guide makes learning this tool easy and enjoyable.

Natural Language Processing Basics with TextBlob. This is a thorough tutorial that explains and demonstrates how each feature of the library works in practice.

3. spaCy

spaCy is younger than NLTK but powerful, and it is designed with productivity and performance in mind, both for the tool itself and for the Python developers who use it. Its feature set is built around modern machine learning: its pretrained pipelines are neural network models, and it integrates with deep learning frameworks. spaCy is also known for its very fast syntactic (dependency) parser.

Features:

Autocomplete and autocorrect

Text classification (trainable, for example, for sentiment analysis)

Summarization

Built-in word vectors (in the medium and large English pipelines)

Fast, high-quality tokenization

Deep learning integration

Dependency parsing.

Example - Word vector representation

Resources:

spaCy official website. Make sure to visit it to get a quick overview of the features available and learn the benefits this tool may offer to your future project.

Intro to NLP with spaCy. This is an overview of the basic features with embedded code examples.

4. Gensim

Gensim is a highly specialized library that works with text only. Its two strongest capabilities are topic modeling and word vectors. It can find similar and related documents and train word embeddings on large corpora; older releases also offered summarization, but that module was removed in Gensim 4.0.

Features:

Latent Semantic Analysis (LSA/LSI/SVD)

Latent Dirichlet Allocation (LDA)

Random Projections (RP)

Hierarchical Dirichlet Process (HDP)

word2vec word embeddings

Example - Creating the corpus

Resources:

Gensim documentation. You should definitely review it, although the documentation alone may not be enough to understand all the features and how to use them.

Gensim on GitHub. This is the official repository of the library.

Gensim Tutorial – A Complete Beginners Guide. This guide contains answers to all the questions the developers who try the tool for the first time may have. Feel free to check it, analyze the code examples, and try the library on your own.

5. CoreNLP

Compared with the libraries analyzed above, CoreNLP offers one of the broadest sets of functions. It was developed by the Stanford NLP Group at Stanford University. Among its special features are open information extraction (OpenIE) and a coreference resolution system. CoreNLP itself is written in Java, so Python projects usually access it through a wrapper such as the CoreNLP client in Stanford’s Stanza library. It suits projects that want a broad set of functions ready for integration with an application.

Features:

Tokenization and sentence splitting

POS tagging

Lemmatization (returning a word to its dictionary form, for example, "called" - "call")

Named entity recognition, including personal names

Sentiment analysis and relation extraction

Summarizing and annotating

Parsing

Pattern learning.

Example - Lemmatization with CoreNLP

Resources:

CoreNLP on GitHub. You may not need additional resources, since the project links to its official documentation, which also includes in-depth tutorials and answers to common questions.

Intro to Stanford’s CoreNLP for Pythoners. If you are completely new to CoreNLP or natural language processing libraries, get started with this professionally but simply written article. All the explanations and examples provided are quite clear even for people far from programming.

Conclusion

We have covered the main Python libraries for natural language processing. As you can see, most of them cover basic functions such as tokenization, parsing, classification, and sentiment analysis. The more advanced libraries are also useful for neural network modeling and deep learning. Also, pay attention to the number of languages supported if you want your application to serve users from different countries.

Our technical team has enough hands-on experience to recommend the best library for your project development after analyzing its specifics.

Unit 1505 124 City Road, London, United Kingdom, EC1V 2NX
